About me

Intro

Hi, I’m Barry Ugochukwu, a data scientist and a technical writer with over three years of experience in both fields. I have a passion for finding insights from data and communicating them effectively to various audiences. I have worked on projects involving data analysis, machine learning, natural language processing, data visualization, and more. I have also written articles, tutorials, reports, and documentation for various platforms and publications.

Some of the tools and technologies I use include Python, R, SQL, JupyterLab, Kubernetes, Docker, GitHub, Watson Studio, and Power BI. I’m always eager to learn new skills and take on new challenges in the field of data science.

In this portfolio, you will find some of my work as a data scientist and a technical writer. You will see how I approach different problems using data science methods and tools and how I document my process and code using clear and concise language.

I hope you enjoy browsing through my portfolio. If you have any questions or feedback, please feel free to contact me 😊

Two of my past projects:

Automating the extraction of athlete mentions from LinkedIn and calculating their sentiment scores

Automating the extraction of athlete mentions from Twitter and calculating their sentiment scores

My Present Project:

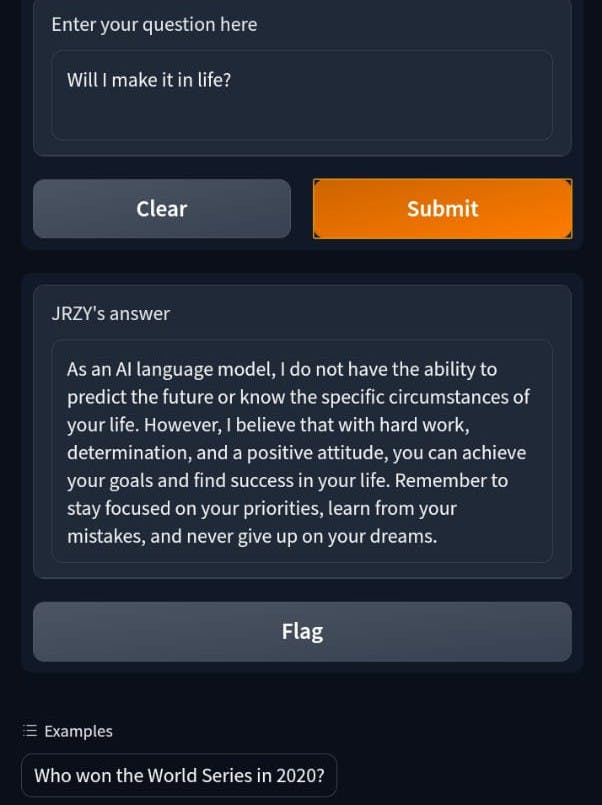

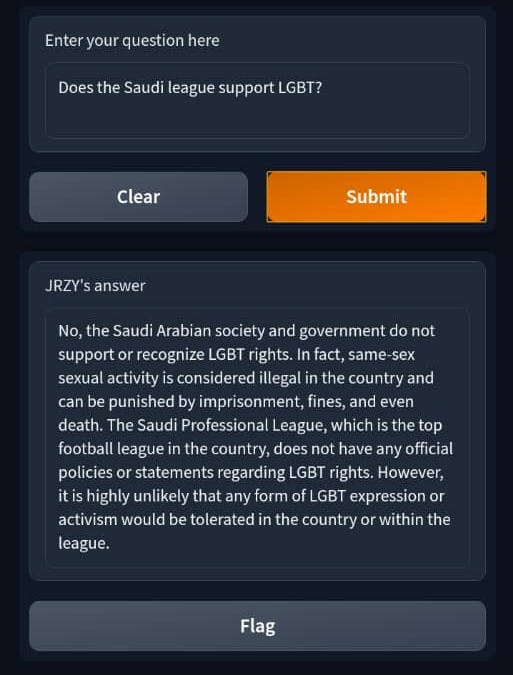

A state-of-the-art chatbot built with gpt-3.5-turbo

I'm using Python and OpenAI’s API to connect to the gpt-3.5-turbo model, sending user inputs to it and receiving its responses. I will also use Flask to create a web interface that lets users interact with the chatbot through text or voice input, and the SpeechRecognition and pyttsx3 libraries to add speech recognition and text-to-speech features.
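To make the API step more concrete, here is a minimal sketch that calls OpenAI's Chat Completions REST endpoint directly with Python's standard library. It is an assumption, not the project's actual code: the project may well use the official `openai` package instead, and the function names `build_chat_request` and `send_chat_request` are hypothetical. The idea it shows is that the whole conversation is sent as a list of `{"role": ..., "content": ...}` dictionaries and the reply comes back in the first choice's message content.

```python
import json
import urllib.request

# Assumption: OpenAI's Chat Completions REST endpoint, called with the
# standard library rather than the official `openai` package.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(messages, api_key, model="gpt-3.5-turbo"):
    """Build (but do not send) a POST request carrying the whole conversation.

    `messages` is a list of {"role": ..., "content": ...} dictionaries.
    """
    payload = {"model": model, "messages": messages}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(request, timeout=30):
    """Send the request over the network and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # The generated reply lives in the first choice's message content.
    return body["choices"][0]["message"]["content"]
```

Usage would look like `send_chat_request(build_chat_request(messages, os.environ["OPENAI_API_KEY"]))`. Keeping request construction separate from the network call makes the payload easy to inspect and test offline.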

The chatbot will keep track of the conversation history and context using a list of dictionaries called message_log.
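Because each call to the chat model is stateless, the full message_log has to be resent on every turn for the bot to "remember" earlier messages. The sketch below shows one way such a log could be managed. Only the name message_log comes from the project description; the helper functions `new_message_log`, `add_message`, and `trim_history`, the default system prompt, and the policy of trimming old turns to stay within the model's context window are all hypothetical.

```python
# Hypothetical helpers for a message_log: a list of {"role", "content"} dicts
# whose first entry is assumed to be the system message.


def new_message_log(system_prompt="You are a helpful assistant."):
    """Start a conversation with a system message that sets the bot's behavior."""
    return [{"role": "system", "content": system_prompt}]


def add_message(message_log, role, content):
    """Append one turn in place; role must be "user" or "assistant"."""
    if role not in ("user", "assistant"):
        raise ValueError(f"unexpected role: {role!r}")
    message_log.append({"role": role, "content": content})
    return message_log


def trim_history(message_log, max_turns=10):
    """Return the system message plus only the most recent max_turns messages."""
    system, rest = message_log[:1], message_log[1:]
    # Guard against max_turns == 0, since rest[-0:] would return the whole list.
    recent = rest[-max_turns:] if max_turns > 0 else []
    return system + recent
```

Trimming returns a new list rather than modifying message_log, so the complete history is still available (for example, to display in the web interface) while only the recent context is sent to the model.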

The main features of this project will be:

1. A state-of-the-art chatbot that uses the gpt-3.5-turbo model to generate natural and engaging conversations with users.

2. A web interface that allows users to interact with the chatbot through text or voice input.

3. Speech recognition and text-to-speech features for the chatbot using SpeechRecognition and pyttsx3 libraries.

4. Conversation history and context management using message_log.

This project will demonstrate my skills in natural language processing, web development, speech processing, and API integration. It also shows my interest in building conversational agents with cutting-edge technologies. The repository is not public yet, but this should give you an idea of exactly what I'm trying to build. The screenshots above show a test of my chatbot with a Gradio interface. Note that the speech recognition and text-to-speech features are not yet integrated in this version.

My Future Projects:

Automated crop monitoring using machine learning algorithms to detect signs of pests and disease in plants.

Personalized Tax and Credit Optimization Platform using Python and Machine Learning.

My blog

Yeah, I have a blog where I write about various topics related to ML and its applications, programming, and development tools like Python, APIs, DevOps, AutoML, and more.

Check out my blog at Write ML Code

Before starting my blog, I created content for Walmart host, Workforce development solutions, Dast, and more.

You can request my resume to see the details of my work for them.

Thank you.

Thanks for going through my portfolio. I really hope you enjoyed the projects you saw here. I am always open to interesting projects that challenge me and allow me to use my skills and creativity.

Follow me on Twitter